2019: Three predictions about data centers; silicon photonics will be the core of module development

The technology industry achieved a great deal in 2018, and 2019 promises just as many possibilities. Inphi’s chief technology officer, Dr. Radha Nagarajan, believes that the high-speed data center interconnect (DCI) market, one segment of the industry, will also change in 2019. Here are three developments he expects to see in the data center this year.

1. Geographic disaggregation of data centers will become more common

Data centers require a great deal of physical space, along with supporting infrastructure such as power and cooling. As it becomes increasingly difficult to build large, contiguous mega data centers, geographic disaggregation of data centers will become more common. Disaggregation is especially important in metropolitan areas where land prices are high. High-bandwidth interconnects are critical to connecting these data centers.

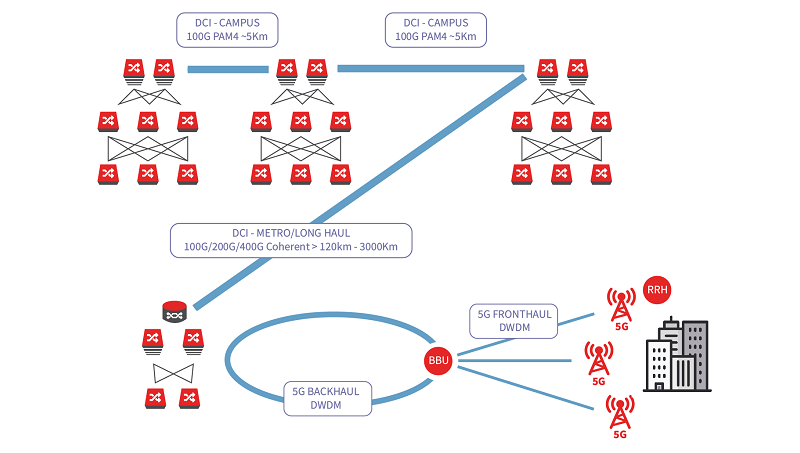

DCI-Campus: These data centers are often connected together, for example in a campus environment. Distances are usually limited to 2 to 5 kilometers. Depending on fiber availability, CWDM and DWDM links overlap at these distances.

DCI-Edge: These connections range from 2 km to 120 km. The links mainly connect distributed data centers within a region and are typically subject to latency constraints. Optical technology options for DCI include direct detection and coherent detection, both implemented with the DWDM transmission format in the fiber C-band (the 192 THz to 196 THz window). Direct detection uses amplitude modulation and a simpler detection scheme; it consumes less power and costs less, but in most cases requires external dispersion compensation. At 100 Gbps, direct-detection 4-level pulse amplitude modulation (PAM4) is a cost-effective approach for DCI-Edge applications. At the same symbol rate, PAM4 carries twice as many bits as the traditional non-return-to-zero (NRZ) format, because each PAM4 symbol encodes two bits instead of one. For next-generation 400-Gbps (per wavelength) DCI systems, the 60-Gbaud, 16-QAM coherent format is the leading contender.
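The bit-rate and band claims above reduce to simple arithmetic: raw line rate = symbol rate × log2(number of levels) × number of polarizations, and wavelength = c / frequency. The minimal sketch below works through them. The 28-Gbaud figure is only an illustrative symbol rate, and treating the 60-Gbaud 16-QAM signal as dual-polarization is an assumption not stated in the article.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def bits_per_symbol(levels: int) -> float:
    """Bits carried per symbol by an M-level constellation: log2(M)."""
    if levels < 2:
        raise ValueError("a constellation needs at least two levels")
    return math.log2(levels)


def line_rate_gbps(baud_gbd: float, levels: int, polarizations: int = 1) -> float:
    """Raw line rate in Gb/s = symbol rate x bits per symbol x polarizations.

    The result includes FEC and framing overhead; the net payload is lower.
    """
    return baud_gbd * bits_per_symbol(levels) * polarizations


def frequency_thz_to_wavelength_nm(freq_thz: float) -> float:
    """Convert an optical frequency in THz to a vacuum wavelength in nm (lambda = c / f)."""
    return SPEED_OF_LIGHT_M_PER_S / (freq_thz * 1e12) * 1e9


if __name__ == "__main__":
    # NRZ (2 levels) vs PAM4 (4 levels) at the same illustrative symbol rate.
    print(line_rate_gbps(28, 2), line_rate_gbps(28, 4))  # 28.0 56.0
    # 60 Gbaud 16-QAM, assuming dual polarization.
    print(line_rate_gbps(60, 16, polarizations=2))  # 480.0
    # Edges of the 192-196 THz C-band window, in nm.
    print(round(frequency_thz_to_wavelength_nm(196), 1),
          round(frequency_thz_to_wavelength_nm(192), 1))  # 1529.6 1561.4
```

Under the dual-polarization assumption, 480 Gb/s of raw capacity leaves headroom above the 400-Gbps payload for FEC and framing overhead.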

DCI-Metro/Long Haul: This category covers distances beyond DCI-Edge, with terrestrial links of up to 3,000 kilometers and even longer subsea links. Coherent modulation formats are used in this category, and the modulation type can differ with distance. Coherent formats modulate both amplitude and phase, require a local oscillator laser for detection and complex digital signal processing, consume more power, reach farther, and cost more than direct detection or NRZ methods.
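The three categories above are defined by link distance, and their ranges overlap. The minimal sketch below encodes those ranges as stated in the article. It returns every matching category instead of a single one, because a 3 km link, for example, qualifies as both Campus and Edge. The function name is hypothetical, and links shorter than 2 km (intra-data-center) return no category.

```python
def dci_categories(distance_km: float) -> list[str]:
    """Return every DCI category whose distance range covers distance_km.

    Ranges follow the article: Campus 2-5 km, Edge 2-120 km, and
    Metro/Long Haul beyond 120 km (terrestrial up to about 3,000 km,
    subsea longer). Because the ranges overlap, one distance can match
    more than one category.
    """
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    categories = []
    if 2 <= distance_km <= 5:
        categories.append("DCI-Campus")
    if 2 <= distance_km <= 120:
        categories.append("DCI-Edge")
    if distance_km > 120:
        categories.append("DCI-Metro/Long Haul")
    return categories


if __name__ == "__main__":
    for d in (3, 80, 600):
        print(d, dci_categories(d))
```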

2. Data centers will continue to evolve

DCI-Campus, DCI-Edge and DCI-Metro/Long Haul interconnects will all continue to evolve. Over the past few years, DCI has become a focus for traditional DWDM system suppliers. The growing bandwidth requirements of cloud service providers (CSPs) offering software-as-a-service (SaaS), platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) are driving demand for optical systems that connect the switches and routers in CSP data center networks. Today these connections need to run at 100 Gbps. Inside the data center, direct-attach copper (DAC) cabling, active optical cables (AOC) or 100G “gray” optics can be used. For connections between data center facilities (campus or edge/metro applications), until recently the only available option was a full-featured, coherent transponder-based approach, which is sub-optimal.

With the transition to a 100G ecosystem, data center network architecture has evolved away from the more traditional model, in which all data center facilities sit on a single large “mega data center” campus. Most CSPs have converged on a distributed regional architecture to achieve the required scale and provide highly available cloud services.

Data center regions are typically located near densely populated metropolitan areas, to give the end customers closest to them the best service (in terms of latency and availability). Regional architectures differ slightly between CSPs, but they consist of redundant regional “gateways” or “hubs”. These gateways or hubs connect to the CSP’s wide area network (WAN) backbone (and to edge sites that may be used for peering, local content transport or subsea transport). When a region needs to grow, additional facilities can easily be procured and connected to the regional gateways. Compared with the relatively high cost and long construction time of building a new mega data center, this allows a region to expand quickly, with the added benefit of introducing distinct availability zones (AZs) within a given region.
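The regional pattern described above, with facilities attached to redundant gateways and new facilities added by connecting them to those gateways, can be sketched as a small data structure. The sketch below is hypothetical: the `Region` class, the rule that "redundant" means at least two gateway links, and the failure check are illustrative assumptions, not any CSP's actual design.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """Toy model of a CSP region: data center facilities attach to redundant gateways."""

    gateways: set[str]
    min_gateway_links: int = 2  # assumption: "redundant" means at least two gateways
    facilities: dict[str, set[str]] = field(default_factory=dict)

    def add_facility(self, name: str, linked_gateways: set[str]) -> None:
        """Attach a new facility, rejecting unknown or insufficiently redundant links."""
        unknown = linked_gateways - self.gateways
        if unknown:
            raise ValueError(f"unknown gateways: {sorted(unknown)}")
        if len(linked_gateways) < self.min_gateway_links:
            raise ValueError(
                f"{name} must connect to at least {self.min_gateway_links} gateways"
            )
        self.facilities[name] = set(linked_gateways)

    def survives_gateway_failure(self, failed: str) -> bool:
        """True if every facility still reaches some gateway after `failed` goes down."""
        return all(links - {failed} for links in self.facilities.values())


if __name__ == "__main__":
    region = Region(gateways={"gw-a", "gw-b"})
    region.add_facility("dc-1", {"gw-a", "gw-b"})
    region.add_facility("dc-2", {"gw-a", "gw-b"})  # growth = one more attach call
    print(region.survives_gateway_failure("gw-a"))
```

Enforcing the redundancy rule at attach time keeps expansion a cheap, local operation, which mirrors the article's point that new facilities can simply be connected to the existing gateways.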

The move from a mega data center architecture to a regional one introduces additional constraints that must be considered when choosing gateway and data center facility locations. For example, to ensure the same customer experience (from a latency perspective), the maximum distance between any two data centers (through a common gateway) must be bounded. Another consideration is that gray optics are too inefficient for interconnecting physically distinct data center buildings within the same geographic region. With these factors in mind, today’s coherent platforms are not well suited to DCI applications.
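The distance bound above is ultimately a latency bound. The minimal sketch below turns it into a check. It assumes a fiber group index of about 1.468, or roughly 4.9 µs per km one way, and ignores equipment and queuing delay. The region, its distances and the latency budgets are hypothetical. Every shared gateway is checked, not only the nearest one, on the assumption that traffic may fail over to either redundant gateway.

```python
import itertools

# Assumption: signals in standard single-mode fiber travel at about c / 1.468,
# roughly 4.9 microseconds per kilometer one way.
FIBER_GROUP_INDEX = 1.468
SPEED_OF_LIGHT_KM_PER_US = 0.299792458  # vacuum speed of light in km per microsecond


def fiber_delay_us(distance_km: float) -> float:
    """One-way fiber propagation delay; ignores equipment, FEC and queuing delay."""
    return distance_km * FIBER_GROUP_INDEX / SPEED_OF_LIGHT_KM_PER_US


def worst_case_dc_to_dc_km(fiber_km: dict[str, dict[str, float]]) -> float:
    """Longest data-center-to-data-center fiber path through one common gateway.

    fiber_km[dc][gw] is the fiber distance from data center `dc` to gateway `gw`.
    """
    worst = 0.0
    for a, b in itertools.combinations(fiber_km, 2):
        for gw in fiber_km[a].keys() & fiber_km[b].keys():
            worst = max(worst, float(fiber_km[a][gw] + fiber_km[b][gw]))
    return worst


def meets_latency_budget(fiber_km: dict[str, dict[str, float]], budget_us: float) -> bool:
    """True if the worst-case path's one-way fiber delay fits within budget_us."""
    return fiber_delay_us(worst_case_dc_to_dc_km(fiber_km)) <= budget_us


# Hypothetical region: three data centers, two redundant gateways (distances in km).
region = {
    "dc-1": {"gw-a": 20, "gw-b": 35},
    "dc-2": {"gw-a": 30, "gw-b": 25},
    "dc-3": {"gw-a": 45, "gw-b": 40},
}

if __name__ == "__main__":
    worst = worst_case_dc_to_dc_km(region)
    print(worst, round(fiber_delay_us(worst)))  # 75.0 367
```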

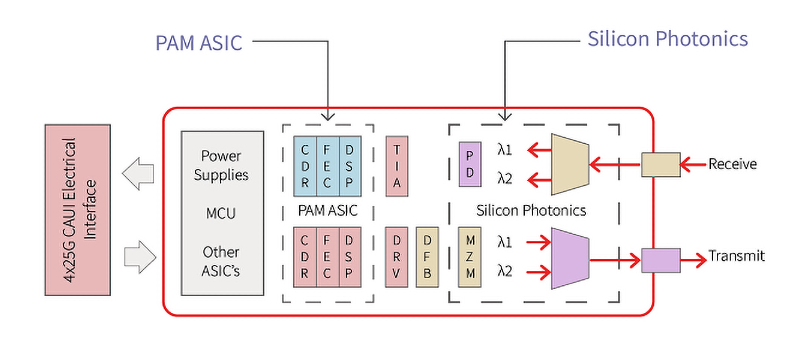

The PAM4 modulation format offers low power consumption, a small footprint and direct detection. Using silicon photonics, a dual-carrier transceiver was developed around a PAM4 application-specific integrated circuit (ASIC) that integrates a digital signal processor (DSP) and forward error correction (FEC), and packaged in the QSFP28 form factor. The resulting switch-pluggable module supports DWDM transmission over a typical DCI link, delivering 4 Tbps per fiber pair at 4.5 W per 100G.
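The module figures above (100G per QSFP28, two carriers per module, 4.5 W per 100G, 4 Tbps per fiber pair) imply a simple budget. The sketch below works through it. Splitting the 100G rate evenly across the two carriers is an assumption, and the `DwdmPluggable` class and `fiber_pair_budget` function are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DwdmPluggable:
    rate_gbps: float  # net rate per module (100G QSFP28 in the article)
    carriers: int     # wavelengths per module (dual-carrier in the article)
    power_w: float    # power per module (4.5 W per 100G in the article)

    def carrier_rate_gbps(self) -> float:
        # Assumption: the module rate is split evenly across its carriers.
        return self.rate_gbps / self.carriers

    def energy_pj_per_bit(self) -> float:
        # W / (Gb/s) = nJ/bit; multiplying by 1000 gives pJ/bit.
        return self.power_w * 1000 / self.rate_gbps


def fiber_pair_budget(module: DwdmPluggable, fiber_capacity_gbps: float) -> dict[str, float]:
    """Modules, wavelengths and total module power needed to fill one fiber pair."""
    modules = math.ceil(fiber_capacity_gbps / module.rate_gbps)
    return {
        "modules": modules,
        "wavelengths": modules * module.carriers,
        "total_power_w": modules * module.power_w,
    }


if __name__ == "__main__":
    qsfp28 = DwdmPluggable(rate_gbps=100, carriers=2, power_w=4.5)
    print(fiber_pair_budget(qsfp28, 4000))
    print(qsfp28.carrier_rate_gbps(), qsfp28.energy_pj_per_bit())
```

Under these assumptions, filling a 4 Tbps fiber pair takes 40 modules and 80 wavelengths, draws 180 W of module power, and costs about 45 pJ per bit.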

3. Silicon photonics and CMOS will become the core of optical module development

The combination of silicon photonics for highly integrated optics and high-speed silicon complementary metal-oxide-semiconductor (CMOS) technology for signal processing will play a key role in the evolution of low-cost, low-power, pluggable optical modules.

The highly integrated silicon photonic chip is the heart of the pluggable module. Compared with indium phosphide, the silicon CMOS platform enables wafer-scale optics on larger 200 mm and 300 mm wafers. Photodetectors for 1300 nm and 1500 nm wavelengths are built by adding germanium epitaxy to a standard silicon CMOS platform. In addition, silicon dioxide and silicon nitride components can be integrated to fabricate low-index-contrast, temperature-insensitive optical components.
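Germanium is needed for the photodetectors because of a bandgap argument. Photons at 1300 nm and 1500 nm carry less energy than silicon's bandgap, so silicon alone barely absorbs them, while germanium's smaller bandgap lets it absorb them. The sketch below is a first-order check that compares photon energy E = hc/λ with the bandgap. The bandgap values are assumed approximate room-temperature textbook figures, and the check ignores how strongly each material absorbs.

```python
# Approximate room-temperature bandgaps in eV (assumed textbook values).
BANDGAP_EV = {"Si": 1.12, "Ge": 0.66}
HC_EV_NM = 1239.84  # Planck constant x speed of light, in eV*nm


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = hc / lambda."""
    return HC_EV_NM / wavelength_nm


def cutoff_wavelength_nm(material: str) -> float:
    """Longest wavelength whose photons can bridge the bandgap: lambda_c = hc / Eg."""
    return HC_EV_NM / BANDGAP_EV[material]


def can_absorb(material: str, wavelength_nm: float) -> bool:
    """First-order check: photon energy at or above the bandgap."""
    return photon_energy_ev(wavelength_nm) >= BANDGAP_EV[material]


if __name__ == "__main__":
    for wl in (1300, 1500):
        print(f"{wl} nm: {photon_energy_ev(wl):.3f} eV, "
              f"Si absorbs: {can_absorb('Si', wl)}, Ge absorbs: {can_absorb('Ge', wl)}")
    print(f"Si cutoff ~{cutoff_wavelength_nm('Si'):.0f} nm, "
          f"Ge cutoff ~{cutoff_wavelength_nm('Ge'):.0f} nm")
```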

In Figure 2, the output optical path of the silicon photonic chip contains a pair of traveling-wave Mach-Zehnder modulators (MZMs), one for each wavelength. The two wavelength outputs are then combined on-chip by an integrated 2:1 interleaver, which acts as the DWDM multiplexer. The same silicon MZM can be used for both NRZ and PAM4 modulation by changing only the drive signal.
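The point that one MZM serves both NRZ and PAM4 can be illustrated with an idealized modulator model. The sketch below assumes an intensity transfer of cos²(πV / 2Vπ) biased at quadrature (Vπ/2), a drive swing equal to Vπ, and Gray-coded PAM4. All three are illustrative assumptions, not details of the chip in Figure 2. Only the mapping from bits to drive levels differs between the two formats.

```python
import math

# Gray-coded PAM4: adjacent amplitude levels differ by one bit.
PAM4_GRAY = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def bits_to_levels(bits: list[int], fmt: str) -> list[int]:
    """Map bits to level indices: 1 bit/symbol for NRZ, 2 bits/symbol for PAM4."""
    if fmt == "NRZ":
        return list(bits)
    if fmt == "PAM4":
        if len(bits) % 2:
            raise ValueError("PAM4 needs an even number of bits")
        return [PAM4_GRAY[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]
    raise ValueError(f"unsupported format: {fmt}")


def mzm_intensity(v: float, v_pi: float) -> float:
    """Idealized MZM intensity transmission: 1 at v = 0, 0 at v = v_pi."""
    return math.cos(math.pi * v / (2 * v_pi)) ** 2


def modulate(bits: list[int], fmt: str, v_pi: float = 1.0, swing: float = 1.0) -> list[float]:
    """Drive the same MZM with NRZ or PAM4 levels around the quadrature bias v_pi / 2."""
    num_levels = 2 if fmt == "NRZ" else 4
    out = []
    for k in bits_to_levels(bits, fmt):
        # Equal voltage steps across the swing; a higher level index lowers
        # the drive voltage, which raises the transmitted intensity.
        offset = -swing / 2 + k * swing / (num_levels - 1)
        out.append(mzm_intensity(v_pi / 2 - offset, v_pi))
    return out


if __name__ == "__main__":
    print([round(x, 3) for x in modulate([0, 1], "NRZ")])
    print([round(x, 3) for x in modulate([0, 0, 0, 1, 1, 1, 1, 0], "PAM4")])
```

With these assumptions, the PAM4 intensities come out near 0, 0.25, 0.75 and 1. Equal voltage steps give unequal intensity steps because of the cosine transfer curve, which is one reason a PAM4 transmit path may need to shape its drive levels.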

As the bandwidth requirements of data center networks continue to grow, Moore’s Law is driving advances in switch chips. These advances let switch and router platforms maintain switch-chip radix parity (the same number of ports) while increasing the capacity of each port. Next-generation switch chips are designed for 400G per port. The Optical Internetworking Forum (OIF) launched a project called 400ZR to standardize next-generation optical DCI modules and to create a diverse, multi-vendor optical ecosystem. The concept is similar to WDM PAM4 but extends to support 400-Gbps requirements.
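Radix parity means the port count stays fixed while per-port speed rises, so aggregate switch capacity scales with port speed. The sketch below shows the arithmetic, assuming a hypothetical 32-port switch chip; the radix is illustrative and not taken from the article.

```python
def switch_capacity_tbps(radix: int, port_speed_gbps: float) -> float:
    """Aggregate switch capacity = number of ports (radix) x per-port speed."""
    return radix * port_speed_gbps / 1000


def port_speed_for_capacity_gbps(capacity_tbps: float, radix: int) -> float:
    """Per-port speed needed to reach capacity_tbps without adding ports."""
    return capacity_tbps * 1000 / radix


# Hypothetical radix of 32 ports, held constant across two switch-chip generations.
RADIX = 32

if __name__ == "__main__":
    for speed_gbps in (100, 400):
        print(f"{RADIX} x {speed_gbps}G = {switch_capacity_tbps(RADIX, speed_gbps)} Tbps")
```

At a fixed radix of 32, moving from 100G to 400G ports quadruples capacity from 3.2 Tbps to 12.8 Tbps, which is why per-port optics such as 400ZR modules must scale along with the switch chip.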